In the pros and cons below (comparing LOSHv1 to V1),

I will ignore the actual fields (with one exception, for Kaspar ![]() ),

),

because that is very subjective, and really,

though Martin Haeuer could give reasoning why LOSHv1 has better fields.

pros

LOSHv1:

- Is a bit more strictly documented

- Is better machine-readability

- Has more projects, including all V1 projects (as of 2. August 2022)

- Has an RDF ontology and a mapping of manifests to that ontology

- Developers were/are “deeper into it”, as in:

they spent more hands-on time with tools and data, consistently, over years.

This applies both for tech devs and Martin,

who came up with the new set of fields.

RDF (point 4. above) opens a plethora of possibilities and advantages,

without any disadvantage,

as the more simple manifest representation also still exists,

and nobody has to write RDF by hand.

cons

LOSHv1:

- Has not been developed by a larger community

- Has (probably) had less people using/involved with it so far

- Does not further categorize source and export files into CAD, Electronics and so on

(though one could argue this is possible with the file extension) - Has no

sourceandexportfile data yet

This last point is an issue for Kaspar right now with LOSH,

and it is two fold:

okh-tool does not currently

map V1design-filesandschematicsto LOSHv1sourceandexportfiles.

This would be less then one day of work for me,

which I’d like to do, and will (hopefully) at some point.

This only matters for V1 projects, when converting them to LOSHv1,- For the LOSHv1 data directly krawl’ed from platforms.

would need adjustments in the krawler

(I am not sure if this is worked on or even done already by now)

The list of OSH file types with their properties

should help in both cases.

RDF pros

RDF is the de-facto Open Linked-Data standard.

This means, that if we do it right (by linking the ontology to commonly used RDF schemas)

That RDF ready consumers just need to link to the source of the data,

And can access fields without knowing anything about OKH, without knowing that the project uses OKH, and still use all the fields that are linked to the commonly used RDF schemas.

For example, it would make it easy (or even a no-op) for search engines,

to send the user accurate results including OKH projects,

or it might allow libraries to index OKH projects/user-manuals alongside their books.

RDF is also a DB-ready format, so when aggregating projects in RDF form,

one can add them to an RDF-DB, and run queries on it, using SPARQL (quite similar to SQL).

While it takes some time to wrap ones head around RDF at first,

really, it is very simple, which comes with a lot of benefits.

It are just triples of the form:

Subject --Property--> Object

e.g.:

Jake --hasA--> Dog

ExampleProject --isA--> Project

ExampleProject --name--> "Example Project"

ExampleProject --license--> CC-BY-SA

ExampleProject --licensor--> JohnDoe

JohnDoe --name--> "John Doe"

JohnDoe --email--> "john.doe@email.com"

This is almost as complex as it gets,

just that there are also name-spaces (based on URIs) involved in reality.

One can then run queries like:

- Give me all projects that have a GNU approved license and at least one image defined

- Give me all people that are authors of more then one project

- Count the number of uses of each license over all projects

RDF DBs are very simple to set up and use.

Links

- The main repository,

describing the standard in different formats,

and keeping track of a lot of issues found while using the standard - A list of the fields

- To setup a local RDF DB with the LOSH data, to then being able to query that data,

try the RDF-DB-tester. - All the latest LOSH data,

both the TOML manifest files (super easily convertible to JSON) and in RDF/Turtle.

This is also used by the RDF-DB-tester above. - The

okhCLI tool:- Converts manifest files from OKH v1 to OKH LOSH-v1, and

- validates both OKH v1 and OKH LOSH-v1 manifest files

- The JSON Schema,

useful for validation of manifests, generation of sample-manifests, generating WebUIs (and maybe more?) - A (hand crafted) WebUI for creating manifests (link missing!)

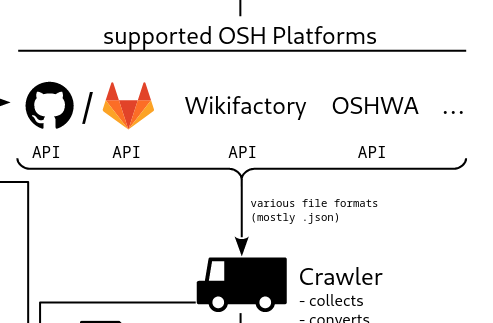

(An other one is in the making by dyne.org) - The crawler

gets projects from GitHub, Wikifactory, Thingiverse and OSHWA - A list of manually curated metadata files for OKH LOSH

- A very cheap Appropedia.org “crawler”,

while developped by LOSH, really is a pure OKH V1 tool. - A generator for a statistical report on LOSH data -

This piece is nothing to brag about, tech-wise -

yet it is a good example for what RDF queries are good for.

(The actual generated report is not available for download yet,

but can be requested from me if desired)

A Note on WikiBase

WikiBase (the software used by WikiData) is NOT RDF (based)!

You can not judge RDF based on any experience you had with WikiBase!

The only thing they have in common,

is that they both use the triple-store concept.

WikiBase can not be used for Linked-Data.

Gettign LOSH data onto WikiBase took two devs weeks of full-time,

very frustrating work stretched over months.

The same, much more stable and feature rich, on an RDF DB,

took one dev 30min (without ever before having used an RDF DB).

Never use WikiBase.